DeepMind at the forefront

The coverage of AlphaGo, DeepMind’s system that defeated professional Go player Lee Sedol, was a turning point. Now there are AlphaZero, AlphaFold and more. DeepMind has made incredible strides in demonstrating how AI can be applied to real-world problems. For example, AlphaFold predicted how certain proteins will fold. Knowing the exact shape of certain proteins opens up enormous potential for how we think about certain medical treatments.

The mRNA-based Covid-19 vaccines are largely built around the shape of the specific ‘spike protein’. The general problem of protein folding had occupied researchers for more than 50 years.

More recently, DeepMind introduced a new “generalist” AI model called Gato. Think of it this way: AlphaGo focuses specifically on the game of Go, and AlphaFold focuses on protein folding. Neither is a generalist AI application, because each concentrates on a single task. Gato, by contrast, can:

Play Atari video games

Caption images

Chat

Stack blocks with a real robotic arm

In total, Gato was able to complete 604 tasks. This is quite different from more specific AI applications that are trained on specific data to optimize for just one thing.

Is AGI now on the horizon?

To put it bluntly: full artificial general intelligence (AGI) is well beyond anything that has been achieved so far. It is possible that the approach Gato takes, applied at a larger scale, leads to something closer to AGI than anything achieved to date. But it is also possible that greater scale leads nowhere. AGI may require breakthroughs that have yet to be made.

People love to ‘get excited’ about AI and its potential. In recent years, OpenAI’s GPT-3 and the image generator DALL-E have drawn a great deal of attention. Both were great achievements, but neither has resulted in technology with human-level understanding. It is also not known whether the approaches used in either could lead to AGI in the future.

If we can’t say when AGI will come, what can we say?

While it’s difficult, if not impossible, to predict big breakthroughs like AGI with certainty, the focus on AI in general has seen an incredible resurgence. The recently released Stanford AI Index report is extremely useful because it sheds light on:

1. The scale of investment flowing into this area. In a sense, investment is a measure of “confidence” because there must be a reasonable belief that productive activity could result from the effort.

2. The scale of AI activity, and the fact that this activity consistently delivers better technical metrics.

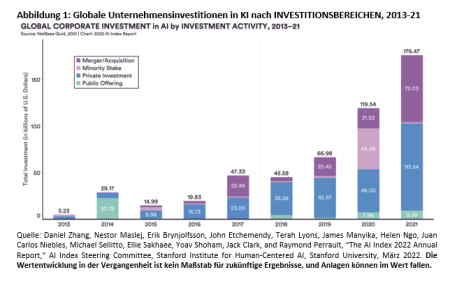

The growth of AI investments

A look at Figure 1 shows that the growth trend in investment is stunning. This is partly due to the excitement about and potential of AI itself, but also to the general environment. The fact that 2020 and 2021 produced such high numbers could be because the cost of capital was minimal and money was chasing exciting stories with potential profits. Based on current knowledge, it is difficult to predict whether the figure for 2022 will be higher than that for 2021.

It is also interesting to take a look at the development of the investment components:

The year 2014 was defined by “public offerings”, which in the other years generally made up a much smaller share of the total.

The main driver of steady investment growth has been private investment. Figure 1 clearly shows the upswing on the private side, although there is no guarantee that it will continue in a straight line through the 2020s.

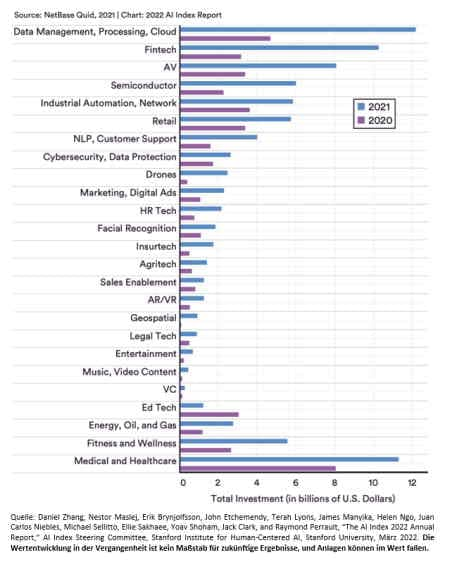

Which activities are financed with the money?

The total investment sums are one thing; the picture becomes more concrete when you look at the individual areas of activity. Figure 2 is helpful in this regard and gives a sense of the changes in 2021 compared with 2020.

In 2021, ‘Data Management, Processing, Cloud’, ‘Fintech’ and ‘Medical and Healthcare’ led the way with over $10 billion each.

It is notable that in the 2020 data (purple), ‘Medical and Healthcare’ tops the list with around $8 billion. This makes the year-over-year increase in ‘Data Management, Processing, Cloud’ and ‘Fintech’ all the more striking.

Figure 2: Private investment in AI by focus area, 2020 vs. 2021

Is AI getting better technically?

This is an intriguing question. The answer could go almost arbitrarily deep and could fill any number of scientific papers. What we can say here is that two distinct efforts are involved:

1. Design, code or otherwise create the specific AI implementation.

2. Figure out how best to test whether it is actually doing what it is supposed to do, and whether it is improving over time.

I find “semantic segmentation” particularly interesting. It sounds like something only an academic would ever say, but the idea is simple. Picture an image of a person riding a bicycle: you want the AI to be able to tell which pixels represent the person and which pixels represent the bike.

If you think it doesn’t matter whether a sophisticated AI can tell the person from the bike in such an image, then I’ll concede that this might not be the most valuable application. However, imagine a medical image of an internal organ, and think of the value of separating healthy tissue from a tumor or lesion. Can you imagine what value that could have?
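The person-and-bicycle example can be made concrete with a small sketch. It is illustrative only: the tiny 4×6 label grid, the class names and the helper functions `pixels_per_class` and `pixel_accuracy` are hypothetical and are not taken from the Stanford AI Index report or from any real model. The sketch assumes a segmentation model has already assigned a class label to every pixel, and shows one simple way such an output could be summarized and checked against a reference labeling.

```python
# Illustrative sketch: every pixel of a tiny hand-made "image" carries a
# class label, which is the kind of output a segmentation model produces.
# The grid and the class names are hypothetical, not real data.
BACKGROUND, PERSON, BIKE = 0, 1, 2
CLASS_NAMES = {BACKGROUND: "background", PERSON: "person", BIKE: "bike"}

reference_mask = [
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 2, 2, 2, 2, 0],
    [0, 2, 0, 0, 2, 0],
]


def pixels_per_class(mask):
    """Count how many pixels were assigned to each class."""
    counts = {name: 0 for name in CLASS_NAMES.values()}
    for row in mask:
        for label in row:
            counts[CLASS_NAMES[label]] += 1
    return counts


def pixel_accuracy(predicted, reference):
    """Share of pixels where the predicted label matches the reference."""
    total = 0
    correct = 0
    for pred_row, ref_row in zip(predicted, reference):
        for pred, ref in zip(pred_row, ref_row):
            total += 1
            correct += int(pred == ref)
    return correct / total


# A hypothetical model output that mislabels one bike pixel as background.
predicted_mask = [row[:] for row in reference_mask]
predicted_mask[3][1] = BACKGROUND

if __name__ == "__main__":
    print(pixels_per_class(reference_mask))
    print(pixel_accuracy(predicted_mask, reference_mask))
```

Pixel accuracy is used here only because it is the simplest possible check; real benchmarks typically rely on more refined measures. The same bookkeeping would apply if the classes were “healthy tissue” and “lesion” instead of “person” and “bike”.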

The Stanford AI Index report breaks down specific tests designed to measure the progress of AI models in areas like these:

Computer vision

Language

Speech

Recommendations

Reinforcement learning

Hardware training times

Robotics

In many of these areas, AI models are approaching what might be termed “human standards,” but it is also important to note that most of these models specialize only in the one particular task for which they were designed.

Conclusion: It is still early days for AI

With certain megatrends, it’s important to have the humility to admit that we don’t know for sure what’s going to happen next. With AI we can predict certain innovations, whether in machine vision, autonomous vehicles or drones, but we also need to recognize that the greatest gains may come from activities we are not yet pursuing.

Stay tuned for “Part 2” in which we discuss recent results from specific companies in this space.

This material was prepared by WisdomTree and its affiliates and is not intended to be relied upon as a forecast, research or investment advice. In addition, it does not constitute a recommendation, offer or solicitation to buy or sell any security or to adopt any investment strategy. Opinions expressed are as of the date of production and may change as subsequent conditions vary. The information and opinions contained in this material are derived from proprietary and non-proprietary sources. Therefore, WisdomTree and its affiliates, their employees, officers or agents do not assume any liability for its accuracy or reliability, nor for any errors or omissions otherwise occurring (including liability to any person for negligence). Use of the information contained in this material is at the reader’s sole discretion. Past performance is not a reliable indicator of future results.

1: Source: https://www.cdc.gov/coronavirus/2019-ncov/vaccines/different-vaccines/mrna.html#:~:text=The%20Pfizer%2DBioNTech%20and%20Moderna,use%20in%20the%20United%20States.

2: Source: Heikkilä, Melissa. “The hype around DeepMind’s new AI model misses what’s actually cool about it.” MIT Technology Review. 23 May 2022.


Image sources: WisdomTree